ChatGPT Says It’s Not an Author

ChatGPT may not be the problem — our tendency to assign consciousness to inanimate objects may be

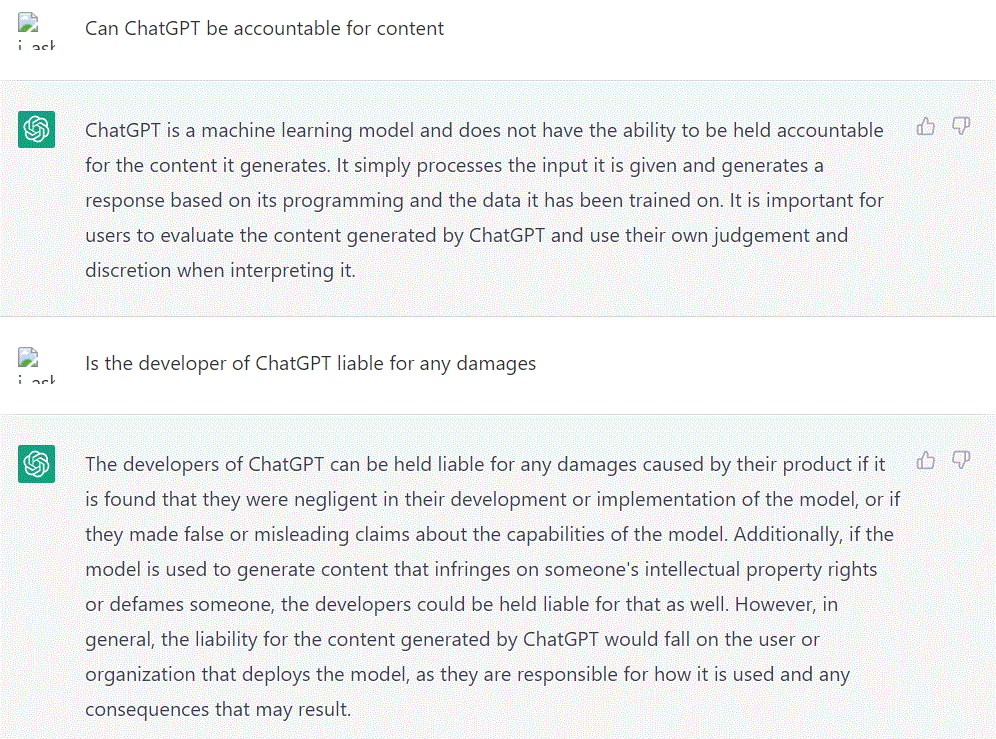

Both Scopus and ORCID have authorship entries for ChatGPT, and some papers have even listed ChatGPT as an author. But ask ChatGPT whether it could be an author, and it says no:

> No, ChatGPT is a language model and does not have the ability to fulfill the ICMJE criteria for authorship, which include:
>
> 1. Substantial contributions to the conception or design of the work; or the acquisition, analysis, or interpretation of data for the work.
>
> 2. Drafting the work or revising it critically for important intellectual content.
>
> 3. Final approval of the version to be published.
>
> 4. Agreement to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.
>
> ChatGPT can assist in the drafting or revising of a work, but it cannot fulfill all of the ICMJE criteria for authorship.

So, why are we allowing this AI agent to create authorship identities in our systems, and to assert authorship in submissions?

Given evidence that ChatGPT can be an unreliable source, there’s even more reason for concern about allowing it to help draft or revise works.

Authors may actually be creating new liabilities for themselves by playing fast and loose with the definition of “author” relative to an AI agent — if you believe ChatGPT:

Can we trust authors? Now that they have a new way to cut corners, probably less than ever.