Data Missing, Study May Be Useless

Claiming journals do nothing to preprints, researchers fail to find or keep key data

A PLOS Biology study making the rounds claims to have found that preprints differ little from the final published versions. However, the researchers failed to account for the data from the full process of preprint posting, journal submission, journal acceptance, and final publication.

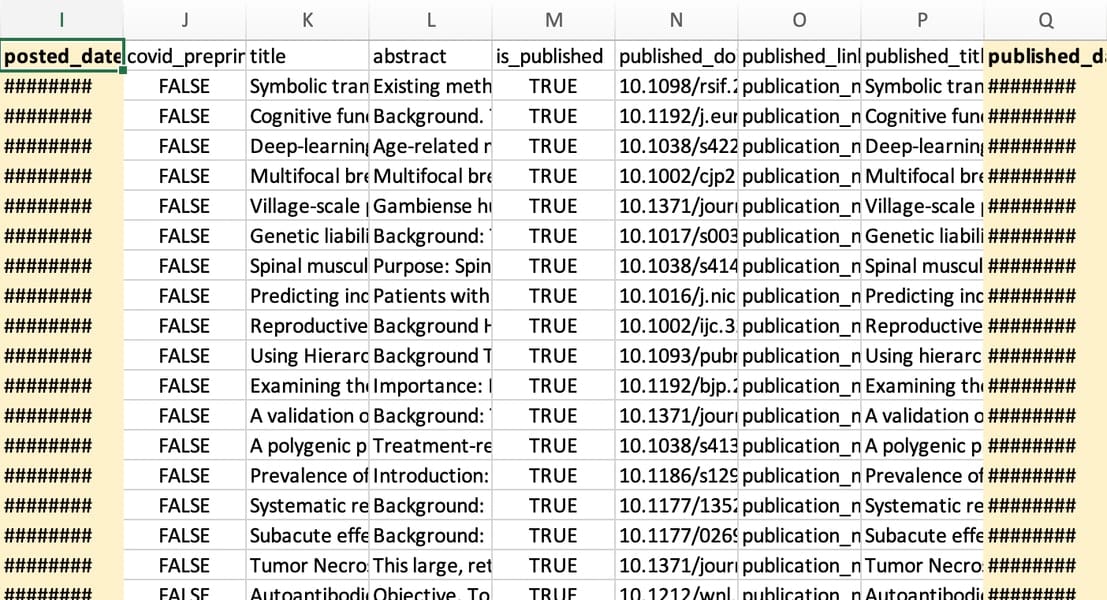

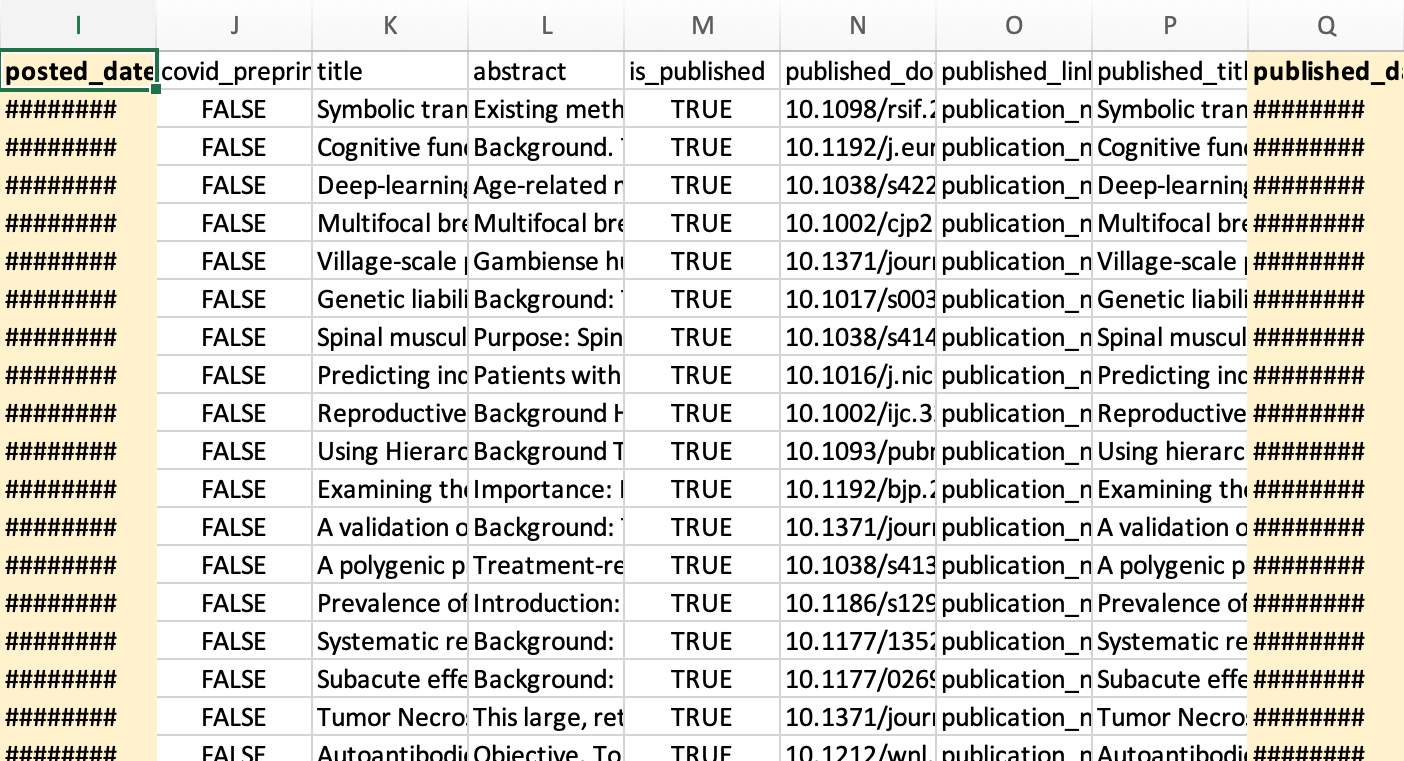

In addition to not taking into account the various stages a paper goes through as part of journal vetting and potential publication, they also appear to have obscured the dates they did have (data from supplementary materials, file “all_pairs_plos_study”):

There are other oddities in this file. For example, the authors claim to have analyzed 105 article pairs, but there are 187 non-excluded article pairs in the file.

Because the file is saved as a .tsv in their dataset, a computer scientist thought the hash symbols might conceal hashed data. However, he confirmed these are textual hashes, nothing more.
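For anyone who wants to repeat the pair count mentioned above, here is a minimal sketch of how non-excluded rows in a tab-separated file could be tallied. The column name `excluded`, its yes/no values, and the sample rows are all assumptions made up for illustration; the real headers in “all_pairs_plos_study” are not reproduced here and would need to be substituted.

```python
import csv
import io


def count_included_pairs(tsv_text, flag_column="excluded"):
    """Count rows whose exclusion flag is not set.

    `flag_column` and the flag values below are hypothetical; adjust
    them to whatever the actual file uses.
    """
    reader = csv.DictReader(io.StringIO(tsv_text), delimiter="\t")
    excluded_values = {"yes", "true", "1"}
    return sum(
        1
        for row in reader
        if row[flag_column].strip().lower() not in excluded_values
    )


# Made-up sample rows, not taken from the study's dataset.
sample = (
    "doi\texcluded\n"
    "10.0000/a\tno\n"
    "10.0000/b\tyes\n"
    "10.0000/c\tno\n"
)

included = count_included_pairs(sample)
print(included)
```

With the real file, the text would be read from disk rather than from an inline string, and the resulting count could be compared against the number of pairs the authors report.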

Now, I don’t have all day to go through all 187 of these articles, but this is a pattern we’ve seen before — back in 2019, I found that 57% of 1,200 bioRxiv preprints related to papers in Nature journals were posted after submission to the journal, and often weeks later. For medRxiv preprints, this rate jumped to 84% in a similar, smaller analysis:

On average, a preprint was posted 88 days (nearly 3 months) after submission to the accepting journal, with a range of 0-221 days. This is far longer than the lag between journal submission and preprint posting for the bioRxiv preprints in the Nature analysis.

As a result, when I saw that these authors never discussed the dates of posting compared to submission, revision, and acceptance, I thought I knew what they’d missed.

After testing a dozen preprint-paper pairs, I think I’m probably right.

Because these data had to be found manually, I went through only the first dozen preprint-paper sets in their dataset. On average, they were posted 35 days after submission to the journal that ultimately published them, which is plenty of time to get initial editorial feedback and probably some peer-review feedback. Of the dozen, only four were posted upon or before journal submission.

The number of days between journal submission and preprint posting ranged from 3-175 (average, 35).
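The arithmetic behind these figures is simple enough to sketch. The example below assumes each preprint-paper pair has been reduced to two dates, journal submission and preprint posting, and computes the lag, its average and range, and the share of preprints posted after submission. The function names and the dates are invented for illustration and are not the pairs I checked.

```python
from datetime import date
from statistics import mean


def posting_lag_days(submitted, posted):
    """Days from journal submission to preprint posting.

    Positive means the preprint was posted after journal submission.
    """
    return (posted - submitted).days


def summarize_lags(pairs):
    """Summarize lags for a list of (submitted, posted) date pairs."""
    lags = [posting_lag_days(s, p) for s, p in pairs]
    posted_after = [lag for lag in lags if lag > 0]
    return {
        "n": len(lags),
        "mean_lag": mean(lags),
        "min_lag": min(lags),
        "max_lag": max(lags),
        "share_posted_after": len(posted_after) / len(lags),
    }


# Hypothetical dates for illustration only.
example_pairs = [
    (date(2020, 3, 1), date(2020, 3, 31)),   # posted 30 days after submission
    (date(2020, 5, 10), date(2020, 5, 10)),  # posted on the day of submission
    (date(2020, 6, 15), date(2020, 6, 5)),   # posted 10 days before submission
    (date(2020, 7, 1), date(2020, 8, 10)),   # posted 40 days after submission
]

summary = summarize_lags(example_pairs)
print(summary)
```

Keeping the sign of the lag matters: a negative value marks a preprint posted before journal submission, which is the case the study implicitly assumes for every pair.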

This suggests that one possible explanation for a lack of difference between posted preprints and published articles is that biomedical preprints reflect journal editorial feedback by the time they’re posted. Authors are cautious. It fits their profile.

The authors of this paper (which, I might add, is poorly written and therefore hard to follow) should go back, check all the dates involved with posting and journal editorial processes, and see what they think after doing all that extra work to verify their hypothesis.

Until then, I’m going to assume that they’ve missed a huge variable that could explain most of their observation in a way that makes the whole hypothesis collapse.

Note: After I published the first draft of this, I analyzed more papers — a total of 27 with preprints on medRxiv and 15 with preprints on bioRxiv. The average time between journal submission and preprint posting for the medRxiv preprint-paper pairs increased to 36 days, with 198 days passing in one case. For bioRxiv preprint-paper pairs (n=15), the journal submission occurred on average 4 days after preprint posting. However, in 1/3 of cases, the preprint was posted after journal submission (range, 1-97 days, average 23 days). Even in this “best case” mix, the authors’ conclusions are suspect, as journal review could have been incorporated before preprint posting for ~1/3 of the preprints involved.

Additional Note: PLOS promoted this study using a press release. One of the authors is the Executive Director of ASAPBio, creating a potential financial interest that is not disclosed.